Exploratory Data Analysis.

Data is big. We dig it.

How can we discover novel insights from data?

How can we learn inherently interpretable models?

How can we draw reliable causal conclusions?

That's exactly what we develop theory and algorithms for.

Two papers at ICLR

Viva Rio! Nils, Matthias, and Sascha together have two papers accepted at the 2026 International Conference on Learning Representations (ICLR). Nils will explain that flatness of the Hessian does imply local but, contrary to common belief, not global adversarial robustness. Matthias and Sascha will present xMM for explainable mixture models, in which they jointly learn the mixture and the rules that characterize which points belong to which component. Great work, guys!

Two papers at AAAI

Lénaïg, Aleena, Osman, and Joscha together have two papers accepted for oral presentation at the 2026 AAAI Conference on Artificial Intelligence (AAAI). Aleena, Joscha and Osman will present Seqret for discovering rules from event sequence data. Lénaïg and Joscha will present Niagara, the first method for determining causality from event sequence data with durations. Congratulations to all!

Luis starts as a PhD student

Warm welcome to Luis Paulus as a new PhD student in the EDA group! Luis finished his MSc thesis with us last year on the topic of discovering good rule sets using continuous optimization. He is now starting his PhD with us through the RTG on Neuro-Explicit Models. Luis is interested in neural methods, in patterns, in explainability, and in particular, methods that work in the real world. Welcome, Luis!

David wins the Dr. Eduard Martin Dissertation Award

On Thursday, October 16th, David Kaltenpoth was awarded the Dr. Eduard Martin Dissertation Award for his Ph.D. thesis titled 'Don't Confound Yourself: Causality from Biased Data'. The prize includes a very colourful owl symbolizing the wisdom and creativity needed for an excellent dissertation. Congratulations, David!

Busy Beaver Award for 'Information-Theoretic Machine Learning'

On Monday, October 13th, Jilles and David were awarded the Busy Beaver Award for the seminar 'Information-Theoretic Machine Learning' that they taught together during the Winter Semester 2024/2025. Many thanks to the Student Representative Council of Computer Science at Saarland University, but all credit goes to the participants of the seminar – Moritz, Ferdinand, Max, Yannic, Georgi, Tim, Jonas, and Léna – as without your engagement the course would never have been any good.

Two papers at NeurIPS

Sarah, Sascha, Janis and Nils all got papers accepted at this year's NeurIPS conference. Sascha and Nils will present NeuRules, a continuously optimizable and inherently interpretable neural architecture for learning rule lists in one go – that is, NeuRules jointly optimizes the discretizations (predicates), conjunctions (rules), and order (list) and so reliably obtains smaller and better rule lists than the state of the art. Sarah and Janis will present Causal Mixture Models, in which they show we can identify different structural causal equations per variable, as well as recover the membership of samples to these mechanisms with high accuracy. Congratulations to all!

One paper, two workshops

Rafa and Osman will present sGES on two continents: in Copenhagen, at the EurIPS Causality for Impact Workshop, and in San Diego, at the NeurIPS Causality for Science Workshop. Rafa developed sGES for her Bachelor's thesis; it uses smart sampling techniques to generalize Greedy Equivalence Search such that it works with non-equivalent scores. Congratulations to both!

Joscha Cueppers is now a Doctor of Engineering

On Thursday, September 11th, 2025, Joscha Cueppers successfully defended his Ph.D. thesis titled 'Discovering Actionable Insights from Event Sequences'. The promotion committee, consisting of Prof. Raimund Seidel, Alexandre Termier (Rennes, France), Matthijs van Leeuwen (Leiden, the Netherlands), and Jilles Vreeken, were highly impressed with the thesis, presentation, and discussion. They unanimously agreed that Joscha passed the requirements for the degree Doctor of Engineering with the distinction Magna Cum Laude. Congratulations, Dr. Cueppers!

Jawad starts as a PhD student

Warm welcome to Jawad Al Rahwanji as a new PhD student in the EDA group! Jawad recently finished his MSc thesis with us on the topic of discovering subgroups with exceptional survival characteristics. He is now starting his PhD with us, while being affiliated with the RTG on Neuro-Explicit Models. Jawad is equally interested in neural and symbolic approaches to data mining and machine learning for healthcare. Welcome, Jawad!

Praharsh starts as a PhD student

Warm welcome to Praharsh Nanavati as a new PhD student in the EDA group! Praharsh did an integrated BSc/MSc at IISER in Bhopal, working on different aspects of causal inference. He is now joining as a PhD student, while being affiliated with the RTG on Neuro-Explicit Models. Praharsh is interested in anything causal, and our first stop is likely going to be causal representation learning. Welcome, Praharsh!

Hendrik starts as a PhD student

Warm welcome to Hendrik Suhr as a new PhD student in the EDA group! Hendrik recently finished his MSc thesis on the topic of anomaly detection and characterization. He decided to stay and pursue a PhD with us too. Hendrik is broadly interested in interpretability, explainability, patterns, and continuous optimization, and we will likely work on many if not all of these. Welcome, Hendrik!

Osman Mian is now a Doctor of Engineering

On Friday, February 28th, 2025, Osman Ali Mian successfully defended his Ph.D. thesis titled 'Practically Applicable Causal Discovery'. The promotion committee, consisting of Profs. Raimund Seidel, Murat Kocaoglu, Isabel Valera, and Jilles Vreeken, were very impressed with the thesis, presentation, and discussion. They unanimously agreed that Osman passed the requirements for the degree Doctor of Engineering with the distinction Magna Cum Laude. Congratulations, Dr. Mian!

FlowChronicle wins the CoNEXT Community Contribution Award

Joscha and Adrien's paper presenting FlowChronicle for mining patterns from network flows and using these to generate realistic network data has won the CoNEXT'24 Community Contribution Award. The jury deemed FlowChronicle to be the paper that is most valuable for the network research community, which is quite the honour. Congratulations, guys!

David wins the Helmholtz AI Dissertation Award

Only days after defending his PhD, David Kaltenpoth was awarded the Helmholtz AI Dissertation Award 2024 for his Ph.D. thesis titled 'Don't Confound Yourself: Causality from Biased Data'. Congratulations, David!

Joscha and Sascha present Cascade in the snow

Joscha and Sascha not only presented their work to the 16,500 attendees of NeurIPS 2024 but, taking the opportunity of being in Vancouver, also went the extra mile and climbed all the way up Mount Whistler to spread the word from the top on how to discover causal networks from event sequences. If that's not dedication to science, I don't know what is!

Three papers at AAAI 2025

Sarah, Lénaïg, Sebastian and Michael all had papers accepted for presentation at the 2025 AAAI Conference on Artificial Intelligence (AAAI). Sarah and Lénaïg will present SpaceTime for causal discovery from non-stationary time series while at the same time detecting when these undergo a regime change. Sebastian and Michael will present FedBMF, the first method for federated Boolean matrix factorization. Sebastian and Iiro will present sSBM for discovering shared stochastic block models between graphs. Congratulations to all!

David Kaltenpoth is now a Doctor of Natural Sciences

On Monday, November 25th, 2024, David Kaltenpoth successfully defended his Ph.D. thesis titled 'Don't Confound Yourself: Causality from Biased Data'. The promotion committee, consisting of Profs. Kun Zhang, Gerhard Weikum, Isabel Valera, and Jilles Vreeken, were deeply impressed with the thesis, presentation, and discussion and unanimously agreed that David passed the requirements for the degree Doctor of Natural Sciences with the distinction Summa Cum Laude. Congratulations, Dr. Kaltenpoth!

Luis differentiably learns the rules

In his Master's thesis, Luis developed a differentiably optimizable architecture for learning conditional dependencies, i.e. association rules, from very high-dimensional data. In essence, his proposal is an autoencoder where the hidden layer consists of paired neurons that together explicitly encode the antecedents and consequents of association rules \(X \rightarrow Y\). Through extensive experiments, Luis shows that RuleNaps works very well in practice and is the first ever association rule mining approach applicable to data with hundreds of thousands of features. Great work, Luis!

Cascade accepted at NeurIPS 2024

Joscha and Sascha will present Cascade at NeurIPS 2024. Cascade is a novel method to learn causal networks from event sequences in topological order. The core idea is to identify those events that, with a reliable delay, cause events in other variates. Cascade can identify instantaneous effects, and hence goes beyond Granger causality. Experiments show that it strongly outperforms the state of the art in terms of accuracy and robustness. You can find the paper and implementation here. Congratulations Joscha, Sascha, and Ahmed!

Anton finds the bias in the network

In his Bachelor's thesis, Anton studied how to determine whether there exists a bias in how a neural network processes information, and if so, where exactly this is encoded. To this end, he developed a new method based on ExplaiNN to discover flows, sets of connected rules, that together encode the information for a certain label. Anton developed metrics to quantify and visualization techniques to show how different these flows are and which parts cause bias, and, last but not least, showed that we can adjust these by intervening on these neurons. Great work, Anton!

Boris Wiegand is now a Doctor of Engineering

On Thursday, July 11th, 2024, Boris Wiegand successfully defended his Ph.D. thesis titled 'Understanding, Predicting, Optimizing Business Processes using Data'. The promotion committee, consisting of Profs. Wil van der Aalst, Gerhard Weikum, Isabel Valera, and Jilles Vreeken, were very impressed with the thesis, presentation, and discussion and unanimously agreed that Boris passed the requirements for the degree Doctor of Engineering with the distinction Magna Cum Laude. Congratulations, Dr.-Ing. Wiegand!

Three papers at AAAI 2024

Joscha, Boris, Nils, Jonas and Paul all had papers accepted for presentation at the 2024 AAAI Conference on Artificial Intelligence (AAAI). Joscha and Paul will present Hopper for discovering serial episodes with gap-models from event sequence data. Nils and Jonas will present DiffNaps, the first truly scalable method for discovering non-redundant sets of differential patterns. Last, but not least, Boris will present UrPils for discovering constraints from process logs. Congratulations to all!

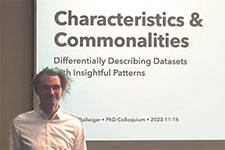

Sebastian Dalleiger is now a Doctor of Natural Sciences

On Thursday, November 16th, 2023, Sebastian Dalleiger successfully defended his Ph.D. thesis titled 'Characteristics and Commonalities – Differentially Describing Datasets with Insightful Patterns'. The promotion committee, consisting of Profs. Thomas Gaertner, Gerhard Weikum, Sven Rahmann, and Jilles Vreeken, were impressed with the thesis, presentation, and discussion and decided that Sebastian passed the requirements for a degree of Doctor of Natural Sciences with the distinction Magna Cum Laude. Congratulations, Dr. rer. nat. Dalleiger!

The EDA group on June 8th 2017 (colourized)
